A US Lawrence Livermore National Laboratory (LLNL)-led initiative has demonstrated that exascale supercomputing can meet grid operators' requirements in emergency situations.

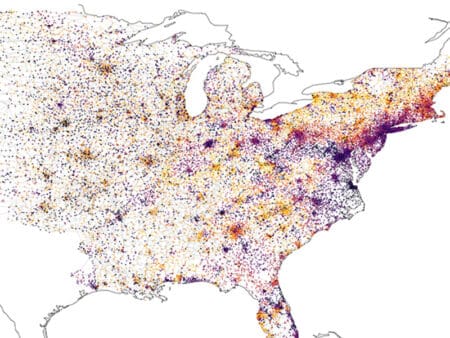

As more variable renewable energy generation is added, managing the grid is becoming increasingly complex, especially during extreme weather events or other disasters, natural or otherwise. This calls for ever more advanced modelling and computational techniques.

While researchers are starting to investigate quantum computers for certain power grid problems, the next generation of supercomputers now emerging are exascale machines. These offer a significant increase in performance: they can perform more than a billion billion (a quintillion, or 10¹⁸) floating-point operations per second, which in computing parlance is 1 exaflop.

In the initiative, part of the ExaSGD project, LLNL-developed software capable of optimising the grid’s response to potential disruption events under different weather scenarios was run on Oak Ridge National Laboratory’s (ORNL) Frontier supercomputer, believed to be currently the fastest in the world.

The open-source optimisation solver, HiOp, was run on 9,000 nodes of the Frontier machine.

In the simulation, the researchers determined safe, cost-optimal power grid setpoints across 100,000 possible grid failures and weather scenarios in just 20 minutes.

The simulation emphasised security-constrained optimal power flow, reflecting the real-world voltage and frequency limits within which the grid must operate to remain safe and reliable.
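To illustrate what "security-constrained" means, here is a deliberately tiny sketch in Python. Everything in it is a hypothetical assumption: two generators, two identical parallel lines, made-up costs and limits, and a single outage case. It is not HiOp's formulation or algorithm, and it uses brute-force enumeration in place of an optimisation solver. The point it shows is that a secure dispatch must respect limits in the base case *and* in every contingency at once, which can force more expensive generation.

```python
from dataclasses import dataclass

# Hypothetical two-generator system: all numbers are illustrative assumptions.
LOAD_MW = 150        # demand at the load bus
LINE_LIMIT_MW = 100  # thermal limit of each parallel line from G1 to the load
G1_MAX_MW = 200      # cheap, remote generator
G2_MAX_MW = 200      # expensive generator located at the load bus
COST_G1 = 20.0       # $/MWh
COST_G2 = 50.0       # $/MWh


@dataclass(frozen=True)
class Case:
    """One operating state: the base case or a contingency (line outage)."""
    name: str
    lines_in_service: int  # parallel lines still carrying G1's output


def is_secure(p1: int, cases: list[Case]) -> bool:
    """Check one dispatch against the limits of every case (preventive security)."""
    p2 = LOAD_MW - p1  # G2 covers the remaining demand (power balance)
    if not (0 <= p1 <= G1_MAX_MW and 0 <= p2 <= G2_MAX_MW):
        return False
    # Identical parallel lines share G1's output equally.
    return all(p1 / c.lines_in_service <= LINE_LIMIT_MW for c in cases)


def solve(cases: list[Case]) -> tuple[int, float]:
    """Search 1 MW steps for the cheapest dispatch that is secure in all cases."""
    best = None
    for p1 in range(LOAD_MW + 1):
        if is_secure(p1, cases):
            cost = COST_G1 * p1 + COST_G2 * (LOAD_MW - p1)
            if best is None or cost < best[1]:
                best = (p1, cost)
    if best is None:
        raise ValueError("no dispatch satisfies every case")
    return best


base_only = [Case("base", 2)]
with_n1 = base_only + [Case("one line out", 1)]
print(solve(base_only))  # (150, 3000.0): cheapest dispatch, base case only
print(solve(with_n1))    # (100, 4500.0): dispatch that survives the outage
```

In this toy, guarding against one line outage raises the hourly cost from 3,000 to 4,500, and each additional case adds another set of constraints to check. Real security-constrained problems are continuous, nonlinear and vastly larger, which is why exhaustive contingency lists become so computationally demanding.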

For comparison, system operators using commodity computing hardware typically consider up to only about 100 hand-picked contingencies and up to 10 weather scenarios.
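The figures quoted so far allow a quick back-of-envelope comparison. The Python sketch below only divides the article's own numbers. It rests on two assumptions not stated in the source: that the 100,000 cases were spread evenly over the 9,000 nodes, and that the operators' baseline pairs each of about 100 contingencies with each of up to 10 weather scenarios (at most 1,000 cases).

```python
# Back-of-envelope arithmetic using figures quoted in the article.
# Assumptions (not from the source): cases are spread evenly over nodes,
# and the operator baseline is the full cross-product of contingencies
# and weather scenarios.
SCENARIOS = 100_000           # grid failures x weather scenarios solved
WALL_CLOCK_MINUTES = 20       # reported time to solution
NODES = 9_000                 # Frontier nodes used by HiOp
BASELINE_CONTINGENCIES = 100  # typical hand-picked contingencies
BASELINE_WEATHER = 10         # typical weather scenarios


def summarise() -> dict[str, float]:
    seconds = WALL_CLOCK_MINUTES * 60
    baseline_cases = BASELINE_CONTINGENCIES * BASELINE_WEATHER
    return {
        "cases_per_second": SCENARIOS / seconds,
        "cases_per_node": SCENARIOS / NODES,
        "baseline_cases": baseline_cases,
        "coverage_vs_baseline": SCENARIOS / baseline_cases,
    }


for name, value in summarise().items():
    print(f"{name}: {value:,.1f}")
```

Under these assumptions the run averages roughly 83 cases per second, or about 11 cases per node, and covers 100 times as many cases as the operators' baseline. The per-second figure is an aggregate rate, not a per-case solve time: with about 11 cases per node over 20 minutes, each node would have on the order of 100 seconds per case.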

LLNL computational mathematician and principal investigator Cosmin Petra says the goal was to show that exascale computers can solve this problem exhaustively, in a manner consistent with power grid operators' current practices.

“Because the list of potential power grid failures is large, this problem is very computationally demanding. We showed that the operations and planning of the power grid can be done under an exhaustive list of failures and weather-related scenarios.”

With this capability, the software stack could be used, for example, to minimise disruptions caused by hurricanes or wildfires, or to engineer the grid to be more resilient under such scenarios.

A key issue for the wider use of the optimisation software is the cost benefit for grid operators.

Petra says in a statement that a cost-benefit analysis has not been done because of confidentiality restrictions, so the benefit is not yet known. However, a 5% improvement in operations cost would likely justify a high-end parallel computer, while an improvement of less than 1% would require downsizing the scale of the computations.

Other participants in the project were the National Renewable Energy Laboratory (NREL) and the Pacific Northwest National Laboratory (PNNL).

With the demonstration completed, the LLNL research team hopes to engage more closely with power industry grid stakeholders and to attract more HiOp users, with the goal of parallelising their optimisation computations and bringing them into the realm of high performance computing.

ExaSGD project

The ExaSGD project is developing algorithms and techniques to address the challenges of maintaining the integrity of the grid under adverse conditions imposed by natural or human-made causes.

ExaGO, the power system modelling framework, is the first grid application to run on Frontier. Its latest version was released in February 2023.

Frontier, built in partnership with Hewlett Packard Enterprise and AMD, became the first computer to achieve exascale performance in 2022, when it was 2.5 times faster than the next-ranked system.

It comprises 74 cabinets housing over 9,400 CPUs and almost 38,000 GPUs, along with 250 PB of storage, and achieves its speed essentially through parallel processing.